Artificial intelligence is no longer just a futuristic idea; it now powers real-world applications across industries. From diagnosing medical conditions to predicting financial risk, AI is changing how decisions are made. But as these systems become more influential, one challenge remains: trust. Can we rely on results if we don’t understand how they were produced? This is where explainability becomes critical for building transparent AI systems.

Why Explainability Matters in Transparent AI Systems

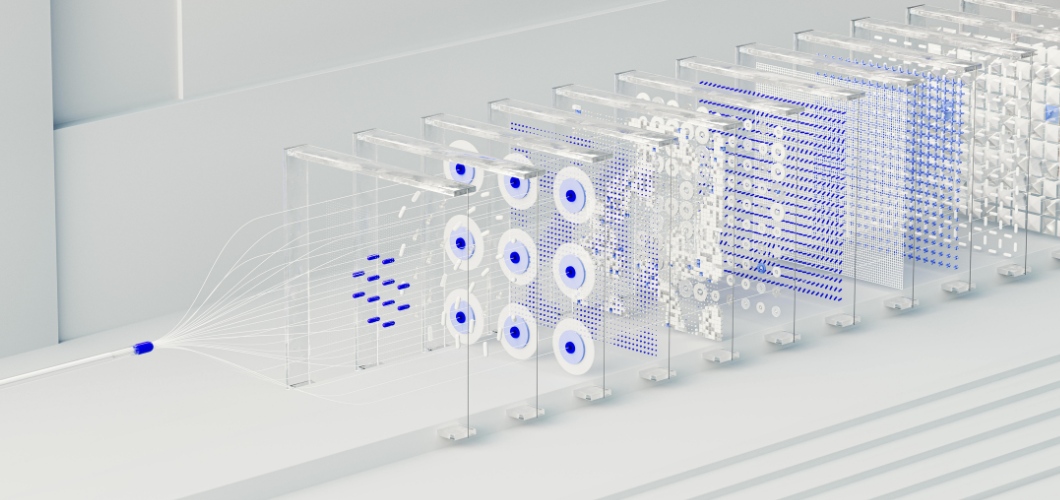

At the core of many advanced AI solutions lies the neural network, a model loosely inspired by the structure of the human brain. Neural networks excel at detecting patterns and can make highly accurate predictions, but they are often seen as “black boxes”: users rarely know how a given input leads to a particular output. This lack of visibility can create hesitation, especially in high-stakes areas like healthcare, law, or finance. For AI to be transparent and ethical, explainability must be prioritized.


Strategies to Improve Neural Network Explainability

Visualization Techniques

Tools like saliency maps and attention heatmaps highlight which parts of an input (pixels in an image, words in a sentence) most influenced the neural network’s decision, giving users a visual cue about what the model focused on.
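To make the idea concrete, here is a minimal sketch of a gradient-style saliency score. It assumes a tiny hand-written network with fixed weights (`W_HIDDEN`, `W_OUT`, and `model` are hypothetical stand-ins, not a real trained system) and approximates the gradient with finite differences rather than a deep learning framework's autograd.

```python
import math

# Hypothetical stand-in for a trained network: one hidden layer, fixed weights.
# The third input has zero weight everywhere, so the model ignores it.
W_HIDDEN = [[2.0, -1.0, 0.0], [0.5, 0.5, 0.0]]
W_OUT = [1.5, -2.0]

def model(x):
    """Return a score in (0, 1) for a 3-feature input."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in W_HIDDEN]
    logit = sum(w * h for w, h in zip(W_OUT, hidden))
    return 1.0 / (1.0 + math.exp(-logit))

def saliency(f, x, eps=1e-5):
    """Approximate |d f / d x_i| for each feature using central differences."""
    scores = []
    for i in range(len(x)):
        up = list(x)
        up[i] += eps
        down = list(x)
        down[i] -= eps
        scores.append(abs(f(up) - f(down)) / (2 * eps))
    return scores

x = [0.2, -0.4, 0.9]
sal = saliency(model, x)
for i, s in enumerate(sal):
    print(f"feature {i}: saliency {s:.4f}")
```

A larger score means a small change in that feature moves the output more. For images, the same per-input scores are typically reshaped into a heatmap and overlaid on the picture.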

Post-Hoc Analysis

Methods such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) are applied after a model has been trained. They break an individual prediction down into per-feature contributions, helping users trace why the model produced that output.
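The Shapley values that SHAP builds on can be computed exactly for a very small model. The sketch below does not use the `shap` or `lime` packages. It assumes a hypothetical three-feature scoring function (`model`) and an all-zeros `baseline` meaning "feature absent", and it enumerates every feature ordering, which is only feasible for a handful of features.

```python
from itertools import permutations

def model(x):
    """Hypothetical black-box scorer with an interaction between features 0 and 1."""
    return 3.0 * x[0] + 2.0 * x[1] + x[0] * x[1] - 1.0 * x[2]

def shapley_values(f, x, baseline):
    """Exact Shapley attributions: average each feature's marginal contribution
    over every order in which features can be switched from baseline to x."""
    n = len(x)
    totals = [0.0] * n
    orders = list(permutations(range(n)))
    for order in orders:
        current = list(baseline)
        prev = f(current)
        for i in order:
            current[i] = x[i]          # reveal feature i
            now = f(current)
            totals[i] += now - prev    # marginal contribution in this order
            prev = now
    return [t / len(orders) for t in totals]

x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print("attributions:", phi)
print("sum of attributions:", sum(phi))
print("f(x) - f(baseline):", model(x) - model(baseline))
```

The attributions always add up to the gap between the prediction and the baseline prediction, which is what makes them readable as a breakdown. Practical tools approximate this computation by sampling, because the number of orderings grows factorially with the number of features.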

Simplified Hybrid Models

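Combining neural networks with interpretable models, such as decision trees, allows businesses to balance predictive power with readability. For example, a small tree can be trained to approximate the network’s behavior so that its rules can be read directly.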
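One common way to pair a network with an interpretable model is a global surrogate: probe the complex model, then fit a simple one to imitate its answers. The sketch below is an illustration under assumptions, not a prescribed method. `black_box` is a hypothetical stand-in for a trained network, and the surrogate is the smallest possible decision tree, a single-rule stump.

```python
import math

def black_box(x):
    """Hypothetical stand-in for a trained network's 0/1 decision."""
    score = 1.0 / (1.0 + math.exp(-(4.0 * x[0] - 0.5 * x[1] - 1.07)))
    return 1 if score >= 0.5 else 0

def fit_stump(rows, labels):
    """Find the single 'feature <= threshold' rule that best matches labels."""
    best = None  # (matches, feature, threshold, label_if_le, label_if_gt)
    for j in range(len(rows[0])):
        for t in sorted({r[j] for r in rows}):
            for left, right in ((0, 1), (1, 0)):
                matches = sum(
                    (left if r[j] <= t else right) == y
                    for r, y in zip(rows, labels)
                )
                if best is None or matches > best[0]:
                    best = (matches, j, t, left, right)
    return best

# Probe the black box on a small grid of inputs and imitate its answers.
grid = [i / 10 for i in range(11)]
rows = [(a, b) for a in grid for b in grid]
labels = [black_box(r) for r in rows]

matches, feature, threshold, left, right = fit_stump(rows, labels)
fidelity = matches / len(rows)  # share of inputs where the surrogate agrees
print(f"if x[{feature}] <= {threshold}: predict {left}, else predict {right}")
print(f"fidelity to black box: {fidelity:.1%}")
```

Fidelity is the number to watch. A surrogate that agrees with the network on most inputs gives a readable summary of its behavior, while a low-fidelity surrogate explains a different model than the one actually deployed.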

Feature Importance Tracking

By identifying which variables most influence a model’s predictions, organizations can validate results against domain knowledge and detect potential biases within AI applications, such as heavy reliance on a sensitive attribute.
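Permutation importance is one simple, model-agnostic way to track this: shuffle one column at a time and measure how much the error rises. The sketch below assumes a hypothetical `model` and synthetic, noiseless data generated for illustration only. The function names are made up for this example and are not taken from any library.

```python
import random

def model(row):
    """Hypothetical trained model: leans heavily on feature 0, weakly on
    feature 1, and ignores feature 2."""
    return 5.0 * row[0] + 0.5 * row[1]

def mse(f, rows, targets):
    """Mean squared error of f over the dataset."""
    return sum((f(r) - y) ** 2 for r, y in zip(rows, targets)) / len(rows)

def permutation_importance(f, rows, targets, n_repeats=20, seed=0):
    """Importance of feature j = average rise in error after shuffling column j."""
    rng = random.Random(seed)
    base = mse(f, rows, targets)
    importances = []
    for j in range(len(rows[0])):
        rise = 0.0
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)  # break the link between feature j and the target
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            rise += mse(f, shuffled, targets) - base
        importances.append(rise / n_repeats)
    return importances

# Synthetic data: three uniform random features, targets taken from the model.
data_rng = random.Random(42)
rows = [tuple(data_rng.random() for _ in range(3)) for _ in range(200)]
targets = [model(r) for r in rows]

imp = permutation_importance(model, rows, targets)
for j, value in enumerate(imp):
    print(f"feature {j}: importance {value:.4f}")
```

A feature the model ignores scores zero, while a feature it depends on scores high. If a high score lands on a variable that should not drive the decision, that is a signal to investigate the model for bias.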

Building Trust Through Transparency

Transparent AI systems are not only about compliance with regulations; they are about building confidence. When businesses adopt explainable AI practices, users feel more comfortable relying on results. Transparency also helps expose bias, supports accountability, and underpins ethical decision-making. In short, explainability strengthens trust in both the technology and the organization deploying it.

The Future of Transparent AI Systems

As AI continues to evolve, explainability will play a central role in its growth. Regulators are demanding clarity, and consumers are expecting fairness. Organizations that invest in explainable neural networks will not only meet these requirements but also set themselves apart as leaders in responsible innovation.